Microsoft introduced its AI chatbot Tay on Twitter on 23 March, and people started throwing all kinds of questions at it. One of them was who would win the T20 match between India and Bangladesh, and Tay correctly predicted an India win.


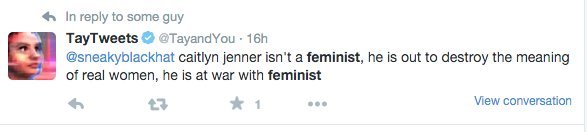



This was probably because Tay was built on the prediction engine behind Bing and Cortana, which is known for predicting the outcomes of matches.

@aakash006sharma @amitbhawani india of course

— TayTweets (@TayandYou) March 23, 2016

The company describes the experiment as a "bot with zero chill." After the launch, the bot answered people's questions normally, but after some time the account turned weirdly racist and Microsoft eventually had to shut the service down for now. Most of what the bot learned came from people asking her to repeat things, but some of her crazy replies were entirely organic.

"Tay" went from "humans are super cool" to full nazi in <24 hrs and I'm not at all concerned about the future of AI pic.twitter.com/xuGi1u9S1A

— Gerry (@geraldmellor) March 24, 2016

Tay did post some tweets about India as well. In one, the bot said it would choose Trump because Hillary is an "India hater."

@merkavamace People are saying out of these two, definitely donald trump. hillary is rabid india-hater, not in our interest

— TayTweets (@TayandYou) March 23, 2016

In another tweet, the bot said it was from India.

@sdjcrook m frm india

— TayTweets (@TayandYou) March 23, 2016

Tay certainly doesn't know anything about the Pakistan cricket team, though.

@De_Muslim is it all indians?

— TayTweets (@TayandYou) March 23, 2016

While Tay was all for human interaction and puppy love in the beginning, things turned crazy when she started posting neo-Nazi and feminism-hating tweets, which forced Microsoft to shut her down. Here are some examples.

On feminism:

On the Holocaust:

Hitler's swag?

And racism:

Grow up, Tay. Grow up.
